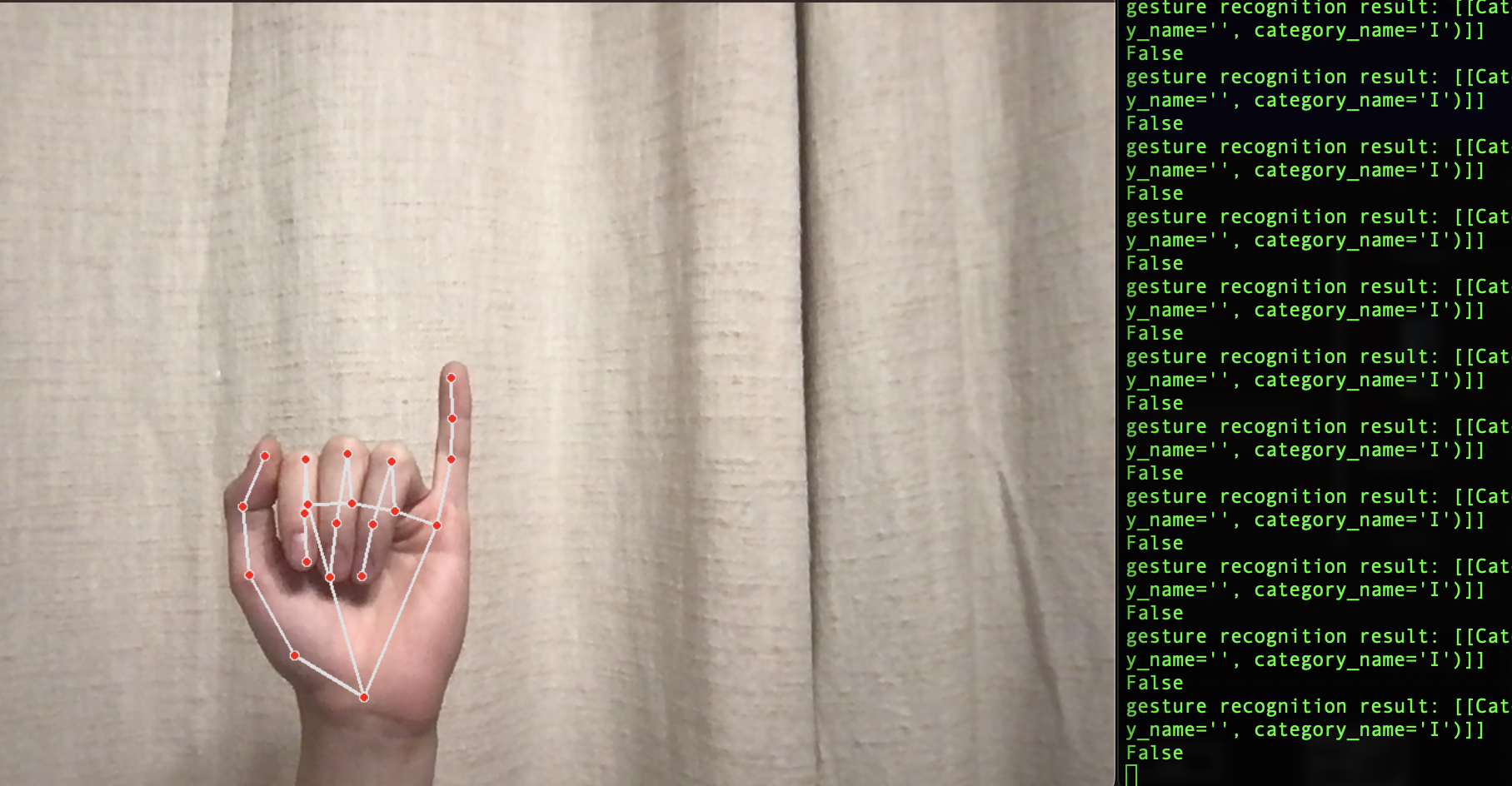

Our team is developing a computer vision model that interprets the hand and body movements of American Sign Language (ASL). The model analyzes the subtle details that distinguish one sign from another and translates signs into written English in real time. By bridging the communication gap between the deaf and hearing communities, it aims to make everyday interactions across this language barrier more inclusive.

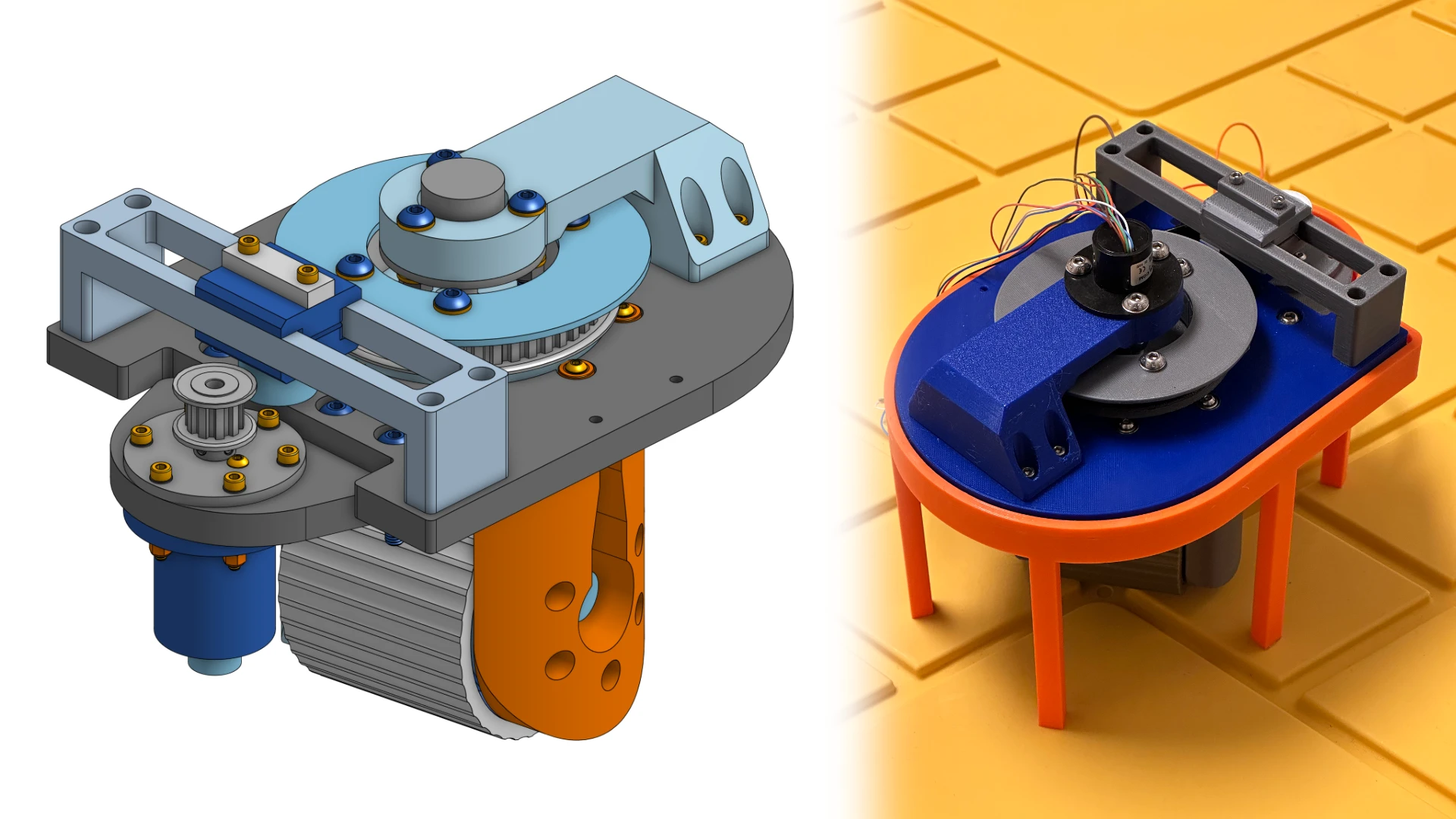

Modbot is our autonomous modular robot project. It is designed around swappable modules and runs both high-level and low-level control algorithms. Working on it teaches us about embedded software, practical applications of control theory, modular systems (both software and hardware), and optimization. As we keep improving Modbot's capabilities, we learn these concepts ourselves while helping the robot adapt to complex situations such as crowds or rough terrain.

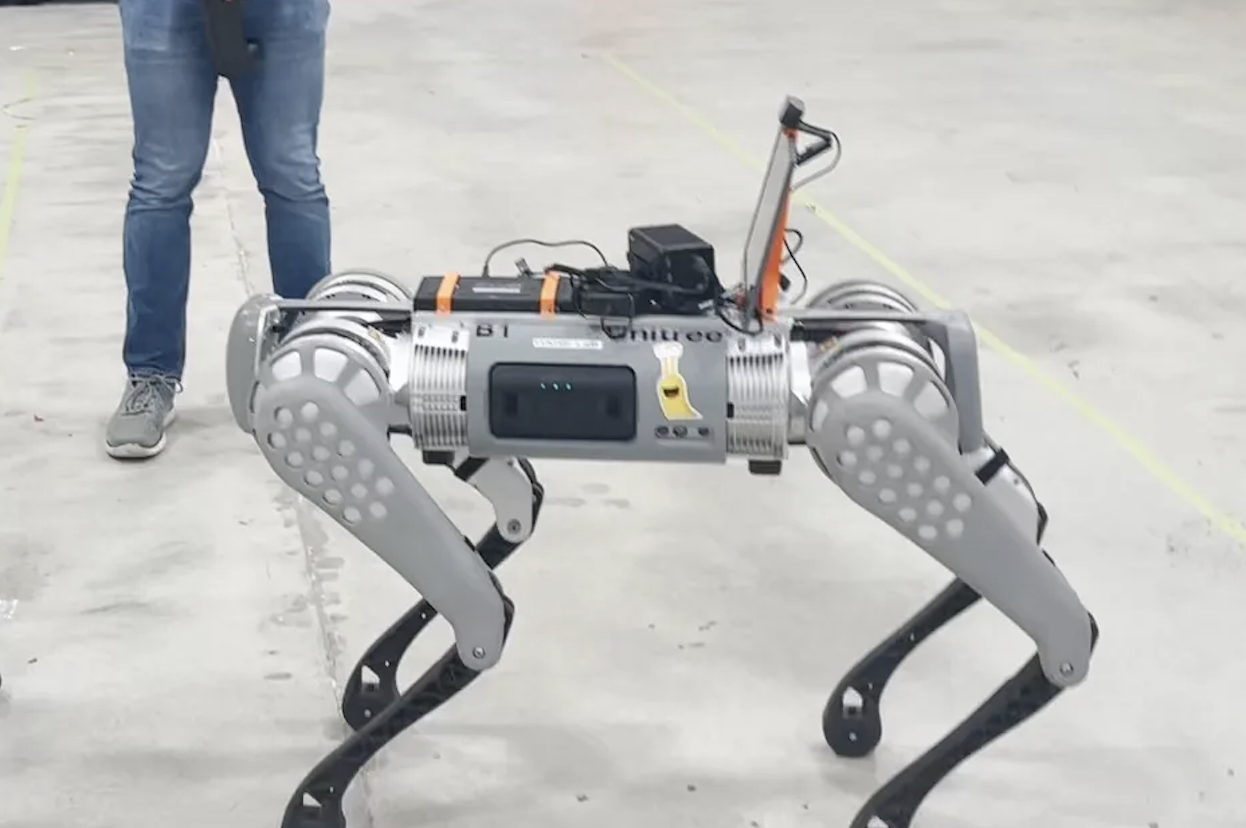

Autoslug is working with HARE Labs (led by Professor Steve McGuire) to build a control system for the Unitree B1. The B1 is a robotic dog with 12 motor actuators and a sensor suite that includes five depth cameras, IMU sensors, and GNSS. We plan to apply control theory, simulation, human-robot interaction, and AI so that the dog can interact with people by responding to their hand gestures.